Software-augmented testing grounded in your drive logs.

Real-fidelity digital twins and deterministic simulation let you confidently test and validate ADAS and AV systems continuously throughout development.

Challenges with AV and ADAS Perception Development

Safe, Reliable Perception

Edge Cases – Unique scenarios are critical for model accuracy and safety, but they are rarely seen in the real world and are often unsafe or impossible to capture

Observability – Changes to a perception model can have unintended consequences that are not observable until tested in the real world

Safety – Crashes can lead to litigation, regulatory scrutiny, and diminished brand perception

Solution – Continuously evaluate both standard and edge-case scenarios in a virtual world designed to mimic the real world

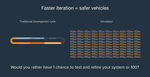

Time and Cost

Collection – It takes months to collect, label, and QA real sensor data

Evaluation – It takes weeks to test in the real world

Staffing a team to capture, label, curate, QA, and field-test is expensive

Solution – Generate the scenarios, test suites, and datasets you need in days, not months

Scalability across regions and hardware

New vehicle rigs and sensor types require recapturing sensor data, labeling, curation, and QA

Entering new geographic markets requires new data and overcoming regulatory hurdles

Regulations are constantly evolving, requiring rework to perception models

Solution – Simulate new camera configurations, environments, and scenarios by updating code, not recapturing data

Parallel Domain Solutions

Evaluate

- Software-in-the-loop, closed-loop, and open-loop testing integration

- Observability – Nightly regression testing to monitor perception performance changes

- Perception unit testing (edge cases, weather, lighting, regional differences)

- Near-validation testing in real-world location scans (PD Replica)
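As an illustration of the nightly regression idea in the list above, here is a minimal sketch of how a per-scenario check might look. It is not Parallel Domain's API: `ScenarioResult`, `find_regressions`, the scenario names, and the `tolerance` value are all hypothetical, and recall stands in for whatever perception metric your team tracks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenarioResult:
    """One metric value for one test scenario (hypothetical structure)."""
    scenario: str   # e.g. "night_rain_pedestrian" (illustrative label)
    recall: float   # any per-scenario perception metric in [0, 1]


def find_regressions(baseline, candidate, tolerance=0.02):
    """Return scenarios whose metric dropped by more than `tolerance`.

    Scenarios that appear only in the candidate run have no baseline
    to compare against and are skipped.
    """
    previous_by_scenario = {r.scenario: r.recall for r in baseline}
    regressions = {}
    for result in candidate:
        previous = previous_by_scenario.get(result.scenario)
        if previous is not None and previous - result.recall > tolerance:
            regressions[result.scenario] = (previous, result.recall)
    return regressions


# Illustrative numbers only, not measured results.
last_night = [
    ScenarioResult("clear_day_highway", 0.90),
    ScenarioResult("night_rain_pedestrian", 0.80),
]
tonight = [
    ScenarioResult("clear_day_highway", 0.89),
    ScenarioResult("night_rain_pedestrian", 0.70),
]

for name, (before, after) in find_regressions(last_night, tonight).items():
    print(f"REGRESSION {name}: {before:.2f} -> {after:.2f}")
```

Keeping the comparison per scenario, rather than on one aggregate score, is what makes a change observable: a model update can improve the average while degrading a specific edge case.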

Analyze

- Benchmark against pre-defined or custom scenarios and sensor rigs, covering new regulations, accident data, locations, etc.

- Explore sensor rig selection, rig configurations, model selection

Train

- Scaled data generation for building or augmenting training data sets

- Simulate in real-world location scans (PD Replica)

- Build data sets covering infinite variations of edge cases, weather, geographic differences, lighting, sensor noise, etc.
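To make the idea of covering variations in code concrete, the sketch below enumerates every combination of a few scenario parameters. It is a generic illustration, not the Data Lab API: `scenario_grid`, the axis names, and the values are assumptions chosen for the example.

```python
from itertools import product


def scenario_grid(axes):
    """Expand {axis: [values]} into one dict per combination of values."""
    names = list(axes)
    value_lists = [axes[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


# Hypothetical variation axes; a real suite would define its own.
axes = {
    "weather": ["clear", "rain", "fog"],
    "lighting": ["day", "dusk", "night"],
    "region": ["us_urban", "eu_urban"],
}

scenarios = scenario_grid(axes)
print(len(scenarios))   # 3 * 3 * 2 combinations
print(scenarios[0])
```

Each resulting dict could then be handed to whatever generation tool you use as the specification for one scenario. Adding a new value to any axis multiplies coverage without new data capture.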

PD Replica - Closing the sim-to-real gap with real environments

Incorporate real-world scans as fully annotated, simulation-ready environments, seamlessly integrated into Parallel Domain’s Data Lab API. Experience unparalleled variety and realism for model testing, training, and validation.

Benefits

Save time and money while operating at scale

Access new geographic regions and hardware configurations

Develop safe and reliable AI-driven systems