Boost Optical Flow with PD Data

Camille Ballas, Michael Stanley, Phillip Thomas, Lars Pandikow, Michael Galarnyk

Optical flow is the task of estimating per-pixel motion between video frames. Optical flow models take two sequential frames as input and output a flow vector for each pixel of the first frame, predicting where that pixel will be in the second frame. Optical flow is an important task for autonomous driving, but real-world flow data is very hard to label, and for humans it can be practically impossible. Labels can only be obtained by using LiDAR information together with the ego trajectory to estimate the motion of objects, whether dynamic or static. Because LiDAR scans are inherently sparse, the very few public optical flow datasets are also sparse. A way around this problem is to use synthetic data, where dense flow labels are readily available. This post goes over how synthetic data can improve optical flow tasks and how tuning Parallel Domain’s synthetic data to mitigate important domain gaps can lead to major performance improvements.

How Synthetic Data can Improve Optical Flow Tasks

Comparing the optical flow labels of the real-world KITTI dataset (left) and Parallel Domain’s synthetic data (right). Color indicates the direction and magnitude of flow.

A major strength of synthetic data is that you can generate as much perfectly labeled data as you like. Synthetic optical flow labels are accurate and dense, so every pixel has a flow label. Another advantage is that machine learning practitioners can iterate not just on the loss or architecture, but also on the datasets themselves. This means that scenes, maps, scenarios, sensors, and flow magnitudes can be quickly tailored.

Commonly used Synthetic Optical Flow Datasets

Because there is so little real optical flow data, researchers typically pretrain their models on synthetic flow datasets with non-commercial license agreements like Flying Chairs (FC) [1] and Flying Things (FT) [2]. Note that neither of these datasets emulates autonomous vehicle scenes, and both contain surreal elements (e.g., random backgrounds from Flickr, CGI foreground objects) placed and rotated randomly to offer a wide variety of optical flow motions. To fine-tune their models for autonomous vehicle applications, researchers use a real-world dataset like KITTI-flow (2015 version) [3], which contains only 200 training samples, with another 200 test samples hidden behind an inaccessible test server. This dataset is one of the few publicly available optical flow datasets for autonomous driving and is used extensively to publish results and benchmarks.

Commonly used Training Practices with Synthetic Optical Flow Data

Despite their surreal elements, the synthetic datasets FC and FT are highly useful [4] and improve on models trained with only the little real-world data available. Thus, they are widely used as part of the standard optical flow training pipeline:

- Pretrain with 1.2M iterations on Flying Chairs

- Pretrain with 600k iterations on Flying Things

- Fine-tune 300k iterations on KITTI

For the real dataset KITTI, it is common to train on all 200 and publish results on those same 200 samples, but that is not a good measure of how well the model performs on new data. Our results in this post are based on training on 100 samples and evaluating model performance on the other 100.
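The post does not say how the 100/100 split was chosen. As a minimal sketch, assuming a seeded random shuffle over the 200 sample indices (the function name and seed are hypothetical), a reproducible held-out split could look like this:

```python
import random


def split_indices(num_samples=200, num_train=100, seed=0):
    """Split sample indices into disjoint train and validation sets.

    Assumption: a seeded shuffle; the split used in the post may differ.
    """
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)  # deterministic for a fixed seed
    train = sorted(indices[:num_train])
    val = sorted(indices[num_train:])
    return train, val


train_ids, val_ids = split_indices()
print(len(train_ids), len(val_ids))
```

Fixing the seed keeps the validation samples the same across experiments, so EPE numbers from different pipelines remain comparable.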

Optical flow tasks are often evaluated with End Point Error (EPE). This metric is the magnitude of the difference (Euclidean distance) between the ground truth and predicted flow vectors for each pixel, typically averaged over all labeled pixels.
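To make the metric concrete, here is a minimal NumPy sketch of EPE. It assumes flow is stored as an `(H, W, 2)` array of `(u, v)` displacements and that the per-pixel errors are averaged; the optional `valid` mask is an assumption added to handle sparse labels such as LiDAR-derived ground truth.

```python
import numpy as np


def end_point_error(pred, gt, valid=None):
    """Mean Euclidean distance between predicted and ground-truth flow.

    pred, gt: arrays of shape (H, W, 2) holding (u, v) flow per pixel.
    valid: optional boolean (H, W) mask; only True pixels are scored.
    """
    per_pixel = np.linalg.norm(pred - gt, axis=-1)  # shape (H, W)
    if valid is None:
        return float(per_pixel.mean())
    return float(per_pixel[valid].mean())


gt = np.zeros((2, 2, 2))
pred = np.zeros((2, 2, 2))
pred[0, 0] = [3.0, 4.0]  # one pixel is off by 5 px, the rest are exact
valid = np.array([[True, True], [False, False]])

print(end_point_error(pred, gt))         # averaged over all 4 pixels
print(end_point_error(pred, gt, valid))  # averaged over the 2 labeled pixels
```

With dense synthetic labels the mask can be omitted; with sparse real-world labels, scoring only the valid pixels avoids counting unlabeled pixels as zero flow.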

Performance Improvements using Parallel Domain’s Synthetic Data

At Parallel Domain, we sought to improve the standard optical flow pipeline by adding domain-specific synthetic data for autonomous driving. By using and iterating on our synthetic data, we were able to improve EPE on optical flow tasks by 18.5%, largely by widening the range of flow magnitudes in the data. This section describes how we integrated PD data into optical flow pipelines, identified flow magnitude as an important domain gap, and subsequently addressed that domain gap with PD data.

Parallel Domain Dataset

To address the problems with the standard optical flow pipeline (e.g., no domain-specific synthetic datasets, a very small real-world dataset), we generated a range of synthetic data for autonomous driving in different locations, scenarios (e.g., highway vs. urban, creeping datasets), and frame rates. This increases the diversity of the available flow data while keeping it domain specific.

We added this data as a third pretraining step to form a new optical flow training pipeline:

- Pretrain with 1.2M iterations on Flying Chairs

- Pretrain with 600k iterations on Flying Things

- Pretrain with 600k iterations on Parallel Domain data

- Fine-tune 300k iterations on KITTI

For these experiments, we used an off-the-shelf PWC-Net architecture [5].

Flow Magnitude Alignment Problem

The initial addition of the Parallel Domain dataset to the standard optical flow pipeline improved EPE by 6%, but we wanted to push that number further. A major advantage of Parallel Domain’s synthetic data is that it is easy to improve the data through iteration. We previously mentioned in our synthetic data best practices blog that good synthetic data should visually resemble real-world sensor data and labels. This is important to ensure generalization [4]. The data should also reflect a distribution of locations, textures, lighting, backgrounds, objects, and agents (e.g., vehicles or pedestrians) similar to what a model will encounter in real-world situations.

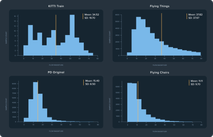

One of our team members located in Karlsruhe, Germany pointed out that some of the KITTI highway scenes are on the German Autobahn, where vehicles drive very fast. This contrasts with our own generated highway scenes located in the US, where vehicles drive at a lower speed. The difference in speed between the KITTI dataset and Parallel Domain’s data is reflected in the flow magnitude (the length of a flow vector) histograms below. We can observe that for the KITTI dataset (KITTI Train), the mean and standard deviation of the flow magnitude are much higher than for the PD data (PD Original). For reference, we also included the synthetic datasets FT and FC (2nd column); they encompass a wide distribution of flow, indicating a potential gap in our initial PD data.
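A comparison like this can be sketched in a few lines of NumPy. The helper below is illustrative only: the function name, bin count, and shared histogram range are assumptions, and the two toy "datasets" are constant flow fields rather than real KITTI or PD samples.

```python
import numpy as np


def flow_magnitude_stats(flows, bins=50, max_magnitude=100.0):
    """Summarize flow magnitudes over a collection of flow fields.

    flows: iterable of arrays with a trailing dimension of 2, e.g. (H, W, 2).
           For sparse labels, pass only the valid pixels as (N, 2) arrays.
    Magnitudes above max_magnitude are left out of the histogram, but they
    still count toward the mean and standard deviation.
    """
    mags = np.concatenate([np.linalg.norm(f, axis=-1).ravel() for f in flows])
    hist, edges = np.histogram(mags, bins=bins, range=(0.0, max_magnitude))
    return {
        "mean": float(mags.mean()),
        "std": float(mags.std()),
        "hist": hist,
        "edges": edges,
    }


# Toy stand-ins for a slow and a fast dataset (hypothetical values).
slow = np.zeros((4, 4, 2))
slow[..., 0], slow[..., 1] = 3.0, 4.0   # every pixel moves 5 px
fast = np.zeros((4, 4, 2))
fast[..., 0], fast[..., 1] = 6.0, 8.0   # every pixel moves 10 px

slow_stats = flow_magnitude_stats([slow])
fast_stats = flow_magnitude_stats([fast])
print(slow_stats["mean"], fast_stats["mean"])
```

Using the same bin edges for every dataset keeps the histograms directly comparable, which is what makes a gap in mean or spread easy to spot.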

Flow Magnitude Alignment Solution

To reduce the flow magnitude domain gap, we sampled some of our Parallel Domain data at a lower frame rate. Because more time passes between two consecutive frames, the motion between them becomes larger, which increases the flow magnitude. We can see that our flow distribution has improved (PD High Flow), covering a wider range of flow magnitudes.
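The effect of a lower frame rate can be illustrated with a toy example. The sketch below assumes a single point moving at constant velocity in image space; the frame rate, velocity, and helper name are hypothetical values chosen for illustration, not settings from the PD datasets.

```python
import numpy as np


def consecutive_displacements(positions):
    """Length of the motion between each pair of consecutive frames."""
    return np.linalg.norm(np.diff(positions, axis=0), axis=1)


fps = 10.0                          # hypothetical capture rate
velocity = np.array([30.0, 0.0])    # hypothetical image-space speed in px/s
timestamps = np.arange(9) / fps     # 9 frames at the original frame rate
positions = timestamps[:, None] * velocity  # (9, 2) pixel positions

full_rate = consecutive_displacements(positions)        # about 3 px per pair
half_rate = consecutive_displacements(positions[::2])   # about 6 px per pair

print(full_rate.mean(), half_rate.mean())
```

Keeping every second frame halves the effective frame rate and roughly doubles the per-pair displacement, which is the mechanism behind the wider flow distribution described above.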

A Major Performance Improvement using Parallel Domain

After we tested the pure synthetic data pipeline, we added the KITTI dataset back into the optical flow training pipeline:

- Pretrain with 1.2M iterations on Flying Chairs

- Pretrain with 600k iterations on Flying Things

- Pretrain with the updated Parallel Domain data – PD High Flow

- Fine-tune 300k iterations on KITTI

When we trained using PD data with a wider distribution of flow magnitude, the EPE dropped by 18.5% compared to the baseline. This result held when we doubled the FT training time for the baseline so that both models were trained for the same number of iterations. This result confirms that performance improves when using Parallel Domain data to pretrain optical flow models, and that flow distribution matters. We were able to push our performance significantly further by reducing the frame rate in our generated data. This makes sense as the highest magnitude pixels in optical flow are typically in the surrounding rails on the sides of the frame.

The largest motions are not usually in relation to the vehicles in front of the ego vehicle, but rather the vehicles on the side. By skipping frames we were able to accentuate the motion between pixels, thus improving our overall performance. This dataset tuning would not have been possible if it were not for synthetic data that allowed us to easily iterate and tune labels accordingly.
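The iteration-matching check mentioned above can be written out as simple arithmetic. In the sketch below, the 600k iteration count for the PD High Flow stage is an assumption carried over from the earlier PD pipeline (the post does not state it for this stage), and the `Stage` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    dataset: str
    iterations: int


def total_iterations(stages):
    """Total number of training iterations across all stages."""
    return sum(stage.iterations for stage in stages)


baseline = [
    Stage("Flying Chairs", 1_200_000),
    Stage("Flying Things", 600_000),
    Stage("KITTI", 300_000),
]
with_pd_high_flow = [
    Stage("Flying Chairs", 1_200_000),
    Stage("Flying Things", 600_000),
    Stage("PD High Flow", 600_000),  # assumed, not stated in the post
    Stage("KITTI", 300_000),
]
baseline_double_ft = [
    Stage("Flying Chairs", 1_200_000),
    Stage("Flying Things", 1_200_000),  # FT training time doubled
    Stage("KITTI", 300_000),
]

for name, stages in [
    ("baseline", baseline),
    ("with PD High Flow", with_pd_high_flow),
    ("baseline, double FT", baseline_double_ft),
]:
    print(f"{name}: {total_iterations(stages):,} iterations")
```

Under that assumption, doubling the Flying Things stage gives the baseline the same total budget as the PD pipeline, so the comparison is not skewed by extra training steps.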

| Baseline | With PD Original | With PD High Flow | Synthetic only |
|---|---|---|---|
| FC-FT-KITTI | FC-FT-PD-KITTI | FC-FT-PD-PDH-KITTI | FC-FT-PDH |
| 3.37 | 3.167 | 2.749 | 3.282 |

End Point Error (EPE) score for the different combinations of datasets (lower is better). Note that results are reported using our 100-train / 100-validation split. See our [W&B report](https://api.wandb.ai/links/paralleldomain/5wtf9cp6) for further details.
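The relative improvements can be recomputed from the table. This is a small sketch with a hypothetical helper name; the EPE values are copied from the table, and the results land close to the rounded percentages quoted in the text.

```python
# EPE values copied from the table above (lower is better).
epe = {
    "FC-FT-KITTI": 3.37,          # baseline
    "FC-FT-PD-KITTI": 3.167,      # with PD Original
    "FC-FT-PD-PDH-KITTI": 2.749,  # with PD High Flow
    "FC-FT-PDH": 3.282,           # synthetic only
}


def relative_improvement(baseline, value):
    """Percentage reduction in EPE relative to the baseline."""
    return 100.0 * (baseline - value) / baseline


baseline_epe = epe["FC-FT-KITTI"]
improvements = {
    name: relative_improvement(baseline_epe, value)
    for name, value in epe.items()
    if name != "FC-FT-KITTI"
}
for name, pct in improvements.items():
    print(f"{name}: {pct:.2f}% lower EPE than the baseline")
```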

A Pure Synthetic Data Pipeline Resulted in a Minor Performance Improvement

After reducing the frame rate on some of our Parallel Domain data, we tried a purely synthetic pipeline that didn’t involve fine-tuning on KITTI:

- Pretrain with 1.2M iterations on Flying Chairs

- Pretrain with 600k iterations on Flying Things

- Train with Parallel Domain data

Surprisingly, the model trained only on synthetic data outperformed the baseline model, which was fine-tuned on real KITTI data but trained without PD data, by 2.5%! If you would like to learn more about training optical flow models with synthetic data, you can check out our documentation.

Conclusion

Optical flow is an important task for autonomous driving, but a major problem is that there is so little real-world optical flow data. This shortage creates a need for synthetic data. In this post, we showed how Parallel Domain’s synthetic data improved optical flow performance by 18.5% by enabling a wide distribution of flow magnitude (per-pixel motion). An important reason why this was possible was that we were able to iterate on our synthetic data to create the best possible dataset at little to no cost, as labels can be easily generated. If you’re interested in more experimental results, check out the detailed write-up in our Weights & Biases report. If you are training optical flow models and want to see how much Parallel Domain’s synthetic data can improve your models, let’s have a conversation!

References

[1] Alexey Dosovitskiy, Philipp Fischer, Eddy Ilg, Philip Häusser, Caner Hazirbas, Vladimir Golkov, Patrick van der Smagt, Daniel Cremers, and Thomas Brox. (2015). FlowNet: Learning Optical Flow with Convolutional Networks. In IEEE International Conference on Computer Vision (ICCV)

[2] Nikolaus Mayer, Eddy Ilg, Philip Häusser, Philipp Fischer, Daniel Cremers, Alexey Dosovitskiy, and Thomas Brox. (2016). A Large Dataset to Train Convolutional Networks for Disparity, Optical Flow, and Scene Flow Estimation. In IEEE / CVF Computer Vision and Pattern Recognition Conference (CVPR)

[3] Moritz Menze and Andreas Geiger. (2015). Object Scene Flow for Autonomous Vehicles. In IEEE / CVF Computer Vision and Pattern Recognition Conference (CVPR)

[4] Nikolaus Mayer, Eddy Ilg, Philipp Fischer, Caner Hazirbas, Daniel Cremers, Alexey Dosovitskiy, and Thomas Brox. (2018). What Makes Good Synthetic Training Data for Learning Disparity and Optical Flow Estimation? In International Journal of Computer Vision (IJCV)

[5] Deqing Sun, Xiaodong Yang, Ming-Yu Liu, and Jan Kautz. (2017). PWC-Net: CNNs for Optical Flow Using Pyramid, Warping, and Cost Volume. In IEEE / CVF Computer Vision and Pattern Recognition Conference (CVPR)